A new study by the non-profit Mozilla Foundation suggests that YouTube continues to recommend to its users harmful videos that violate its own content policies.

The crowdsourced study found that around 71% of the videos that viewers considered ‘regrettable’ were recommended by the video platform’s own algorithm.

What Exactly Are YouTube Regrets?

Put simply, any content that a user finds disturbing or harmful is deemed regrettable. For example, some volunteers were repeatedly recommended videos with titles such as ‘Man humiliates feminist’.

Such misogynistic videos fall under YouTube regrets. Other examples include Covid misinformation, political extremism, and hate speech.

Further Investigation

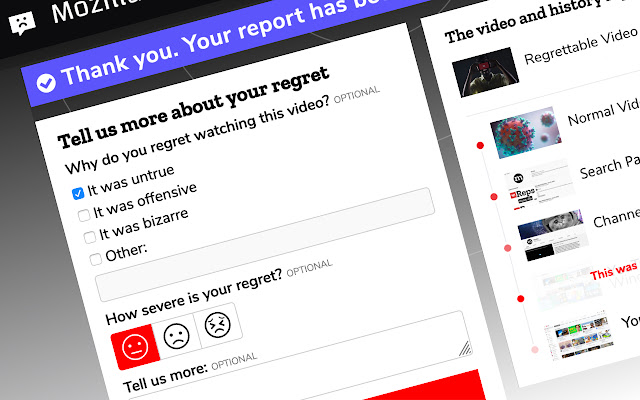

The findings are based on crowdsourced data from RegretsReporter, a browser extension that lets users report harmful videos along with details of what led them there. More than 30,000 YouTube users used the extension to report harmful content that was recommended to them.

The investigation further suggests that non-English speakers were more exposed to disturbing content: the rate of ‘YouTube regrets’ was 60% higher in countries where English is not the primary language. Mozilla says that a total of 3,362 regrettable videos were reported from 91 countries between July 2020 and May 2021. Most of these involved misinformation, violent or graphic content, hate speech or polarizing content, or scams.

The study also suggests that recommended videos were 40% more likely to be reported by users than videos for which they searched.

Brandi Geurkink, Mozilla’s Senior Manager of Advocacy, says that YouTube’s algorithm is designed in a way that misinforms and harms people:

“Our research confirms that YouTube not only hosts, but actively recommends videos that violate its very own policies. We also now know that people in non-English speaking countries are the most likely to bear the brunt of YouTube’s out-of-control recommendation algorithm. Mozilla hopes that these findings — which are just the tip of the iceberg — will convince the public and lawmakers of the urgent need for better transparency into YouTube’s AI.”

User Attention

The YouTube algorithm is essentially a black box. The video-streaming platform relies on it to show users what they like, or might like, in order to keep them hooked for as long as possible.

Mozilla’s study backs up the notion that YouTube’s AI continues to feed users low-grade, divisive content and misinformation. Such content makes it easy for the platform to grab eyeballs by triggering people’s sense of outrage, which implies that the problem of regrettable recommendations is systemic. The more outrage the platform provokes, the more attention it captures, and the more ad revenue it stands to earn.

To fix YouTube’s algorithm, Mozilla is calling for ‘common sense transparency laws, better oversight, and consumer pressure’. It is also calling for legal protection for independent researchers, so that they can investigate the algorithm’s impacts and help repair its worst excesses.

Superficial Claims

YouTube has long been accused of fuelling societal ills by promoting hate speech, political extremism, and conspiracy junk. Its parent company, the tech giant Google, has repeatedly responded to criticism of its algorithms by announcing a few policy tweaks.

The continued harmful behavior of YouTube’s algorithm suggests that Google’s claims of reform have been superficial.